Softmax


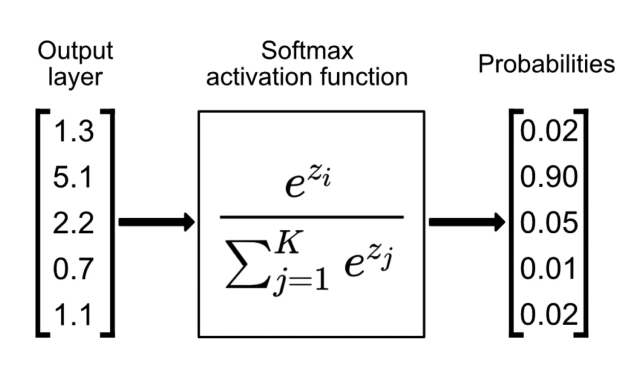

To Probabilities

Converts a vector of logits into a vector of probabilities.

Essentially, it exponentiates each entry of the vector and then normalizes the results so that they sum to 1.
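Written out for a vector $z$ with $K$ entries, this is $\mathrm{softmax}(z)_i = \frac{e^{z_i}}{\sum_{j=1}^{K} e^{z_j}}$. Below is a minimal sketch in plain Python, assuming logits arrive as a list of floats. Subtracting the maximum logit before exponentiating is a common numerical-stability choice, not something these notes specify. It leaves the result unchanged because the factor $e^{-\max z}$ cancels between numerator and denominator.

```python
import math

def softmax(logits):
    """Map a list of logits to a list of probabilities that sum to 1."""
    # Shift by the max so math.exp never sees a large positive argument;
    # the shift cancels in the ratio, so the probabilities are unchanged.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]  # exponentiate each entry
    total = sum(exps)                          # normalizing constant
    return [e / total for e in exps]           # rescale so entries sum to 1

probs = softmax([2.0, 1.0, 0.1])
print([round(p, 3) for p in probs])  # [0.659, 0.242, 0.099]
```

Without the shift, an input such as `[1000.0, 1000.0]` would overflow `math.exp`; with it, the function returns `[0.5, 0.5]`.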

- Max: because the largest logit gets amplified the most.
- Soft: because the other logits are suppressed but never set exactly to zero.

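Think of it as a softened version of the (arg)max function: instead of putting all of the weight on the largest entry, it spreads the weight across every entry while favoring the largest.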
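One way to see the "softened max" reading is to compare softmax against a hard one-hot max on the same logits. The sketch below multiplies the logits by a `scale` factor before applying softmax; that factor (often written as an inverse temperature) and the helper `hard_max_one_hot` are illustrative assumptions, not part of these notes.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def hard_max_one_hot(logits):
    """Hypothetical 'hard' counterpart: all mass on the largest entry."""
    i = logits.index(max(logits))
    return [1.0 if j == i else 0.0 for j in range(len(logits))]

logits = [2.0, 1.0, 0.1]
for scale in (0.1, 1.0, 10.0):
    # Larger scale exaggerates gaps between logits before exponentiating.
    scaled = [scale * z for z in logits]
    print(scale, [round(p, 3) for p in softmax(scaled)])
print("hard:", hard_max_one_hot(logits))
```

As the scale grows, the softmax output approaches the one-hot vector; as it shrinks, the output flattens toward uniform. At every scale each probability stays strictly positive, which is the "soft" part.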

Computational Complexity

Let $K$ be the dimension of the input vector.

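Because the normalizing sum runs over all $K$ entries, each evaluation costs $O(K)$ time. If $K$ is large (for example, when $K$ is the vocabulary size of a language model), computing this every iteration can be computationally expensive.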
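As a rough illustration of where the cost comes from: the sketch below asks for the probability of a single entry, yet the denominator still has to touch all $K$ logits. The helper `softmax_entry` and the value of `K` are hypothetical choices for illustration.

```python
import math

def softmax_entry(logits, i):
    """Probability of entry i only (hypothetical helper).

    Even for one entry, the denominator sums over every logit,
    so the work is O(K) regardless of which i is requested.
    """
    m = max(logits)                                  # O(K) pass for the max
    denom = sum(math.exp(z - m) for z in logits)     # O(K) exponentials
    return math.exp(logits[i] - m) / denom

K = 50_000                 # hypothetical large output dimension
logits = [0.0] * K
logits[7] = 5.0
print(softmax_entry(logits, 7))
```

There is no shortcut for the denominator here: changing any single logit changes every probability, so an exact evaluation must read all $K$ values each time it runs.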

Good Read: