Basic Time Series Preprocessing

Table of contents

Missing Data

It is quite common to have missing data due to the longitudinal nature of time series data.

Different types of missing data

Random

Could occur due to multiple reasons:

- Recording malfunction

- Human error

- Data corruption

- Unforeseen circumstances…

Systematic

Could occur due to multiple reasons:

- Defined events (i.e. holidays)

- Data collection process (i.e. only weekdays)

- Policy and regulations

- Basically on purpose…

Common ways to handle missing data

Imputation

Most common way to fix missing data.

Depending on the type of missing data (random or systematic), each following method shows different performance.

Whether you include future values in imputation also impacts performance, but needs caution due to lookahead.

Forward fill

Fill with the last known value before the missing value.

Pros:

- Computationally cheap

- Can be easily applied to live-streamed data

Cons:

- Susceptible to introducing unwanted noise / outliers

Backward fill goes the opposite way. However, since it is a case of lookahead, it should not be used for prediction training and be used only if it makes sense via domain knowledge.

Moving average (MA)

Fill with the average of some window of values near the missing value.

Calculation is not limited to arithmetic mean. It can be weighted, geometric, etc.

It is also called rolling mean.

Whether the window should include future values is up to discretion. Including future values is a case of lookahead, but it does improve the estimation of the missing value.

One suggestion is to include future values for visualization and exploration, but exclude them for prediction training.

Pros:

- Good for noisy data

Cons:

- Moving average reduces variance. So it must be kept in mind when evaluating model accuracy with $R^2$ or other error statistics because it leads to overestimation of model performance.

Using total mean to fill missing values is often not a good choice for time series data although it is common for some other data analysis. Also, it is again a case of a lookahead.

Interpolation

Using nearby neighbors to decide how missing point should behave.

What defines a neighbor is up to decision.

For example, linear interpolation uses neighbors to constrain missing point to a linear fit.

Just like moving average, you want to decide whether to include future values in imputation.

Pros:

- Interpolation is especially useful if you know how the data behaves or prior knowledge about the data (e.g. trend, seasonality).

Cons:

- If you don’t have prior knowledge or it doesn’t make sense to expect a known behavior, it’s not very helpful.

When is interpolation better than moving average? As previously mentioned, interpolation is useful if you have prior knowledge. For example if you know there is an upward trend, using moving average will systematically underestimate the missing value. Interpolation can avoid this.

Delete data with missing values

Aside from imputation, you could also decide not to use portions of data with missing values.

It is not desirable because you would lose that much data, but it is a valid option if you have to patch up too many missing values.

Downsampling and Upsampling

Downsampling

Reasons to downsample

Redundant recordings

Redundant recordings with not much new information takes up storage space and processing time.

Possible downsampling method is to select every $n$th data point.

Focus on a specific time scale

For data with seasonal cycle, you may want to downsample to certain months (i.e. only January) to focus on the season of interest for your analysis.

Match frequency to other data

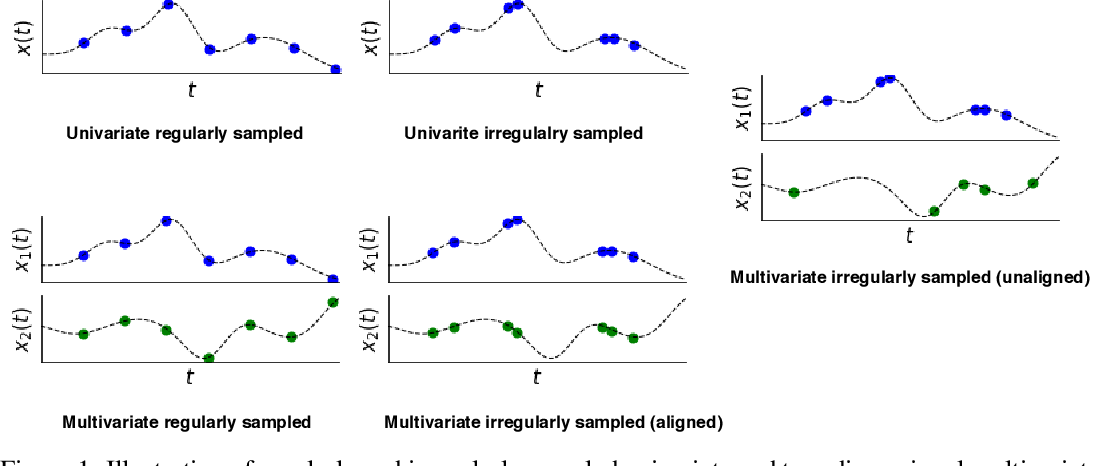

If you have multiple time series data, analysis becomes difficult if they are recorded at varying frequencies.

So you may choose to downsample to the lower frequency among the data.

Rather than simply selecting and dropping points, it is better to perform aggregation (i.e. mean, sum, weighted mean etc.).

Upsampling

This is not exactly the opposite of downsampling, in the sense that you can’t really create new data points out of thin air.

However, you can choose to label/timestamp data at a higher frequency than the original recording frequency.

Beware of creating lookahead when upsampling.

Reasons to upsample

Irregularly sampled data

If you want to convert irregularly sampled data to regularly sampled data, you can upsample the data by upsampling the lags between data points.

To match frequency to other data

Same with downsampling, you may upsample to align data points.

Prior knowledge about data

If you have prior knowledge about the data and you intend to upsample, you can use interpolation just like imputation.

This would actually be in some sense “creating new data” unlike other upsampling methods.

Smoothing Data

Smoothing is related to imputing missing data, so some of the methods (like moving average) apply when smoothing data. In addition, you must be cautious about lookahead just like imputation.

It is a good idea to check that smoothing does not compromise any assumptions your model may have (i.e. model assumes noisy data).

Reasons to smooth data

Visualization

- Most trivial reason

- To understand the data better before analysis

Data preparation

- Eliminate noise and outliers (i.e. via moving average).

- Remove seasonality

Feature generation

- In order to effectively summarize data, you may want to smooth or essentially simplify the data to a lower dimension or a less complex form to generate characteristic features.

Prediction

Simplest form of prediction comes from smoothing data.

Mean reversion

Mean reversion is a financial theory that states that even though price seems to deviate in the short term, it eventually remains close to its long-term mean.

So if you smooth a wave-like time series data, you would get a line and you could predict that future price will remain near that line.

Methods to smooth data

Moving average

Exponential smoothing

It is similar to a weighted moving average, but it differs in that it gives more weight to recent data points and less weight to older data points.

It also differs from moving average in that it uses all past data points (aggregated in a single forecast value) unlike moving average which uses only a window of data points.

The simplest form of exponential smoothing is as follows:

Given some smoothing factor $\alpha$, the simplest smoothed value $S_t$ at time $t$ is defined as:

$$ \begin{cases} S_0 = y_0 \\[1em] S_t = \alpha y_t + (1 - \alpha) S_{t-1} \end{cases} $$

With direct substitution, this recursion expands to weights of

\[1, 1 - \alpha, (1 - \alpha)^2, (1 - \alpha)^3, \dots\]And past values are associated with higher order weights making them less significant.

Hence the name exponential smoothing.

Simple exponential smoothing doesn’t work well on data with a trend. Use Holt’s Method for data with trend and Holt-Winters for data with trend and seasonality.

Other methods

- Kalman filter

- LOESS (locally estimated scatterplot smoothing)

Both are more computationally expensive and are cases of lookahead because they incorporate both past and future values.

Dealing with Seasonality

Beware of Time Zones

Not much to say here, but beware of time zones because time zone, daylight savings, etc. can be a pain.

Beware of Lookahead

During training or evaluation of a model, it is important to avoid using data that would not have been available at the time of the prediction. (If you already know the future, the prediction is gonna be easy, but that’s not what we want to do.)

Any knowledge of the future used in a model is called lookahead.

If you have a lookahead you would find tht your model does not perform as well in real life as it did in training.

Lookahead does not only pertain to calendar time.

Some preprocessing steps such as imputing or smoothing may introduce lookahead, so you want to make sure if this is desirable for your purpose and make sure it is not used for prediction training.