Angles and Orthogonality

Angle Between Vectors

The Cauchy-Schwarz inequality states that an inner product and the norm it induces satisfy:

\[|\langle\mathbf{x},\mathbf{y}\rangle| \leq \|\mathbf{x}\| \|\mathbf{y}\|\]

For nonzero $\mathbf{x}$ and $\mathbf{y}$, dividing both sides by $\|\mathbf{x}\| \|\mathbf{y}\|$ gives:

\[-1 \leq \frac{\langle\mathbf{x},\mathbf{y}\rangle}{\|\mathbf{x}\| \|\mathbf{y}\|} \leq 1\]

Recall that as $\omega$ runs over $[0, \pi]$, $\cos \omega$ takes every value in $[-1, 1]$ exactly once.

So there exists a unique $\omega \in [0, \pi]$ such that

$$ \cos \omega = \frac{\langle\mathbf{x},\mathbf{y}\rangle}{\|\mathbf{x}\| \|\mathbf{y}\|} $$

When our inner product is the dot product, this $\omega$ is the geometric (or Euclidean) angle between $\mathbf{x}$ and $\mathbf{y}$.
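As a minimal sketch of the formula above, assuming the dot product as the inner product, the angle can be computed directly. The helper names `dot` and `angle` and the example vectors are illustrative choices, not part of the original notes.

```python
import math

def dot(x, y):
    """Standard dot product of two equal-length sequences."""
    return sum(a * b for a, b in zip(x, y))

def angle(x, y):
    """Angle in radians between two nonzero vectors, using the dot product."""
    cos_omega = dot(x, y) / (math.sqrt(dot(x, x)) * math.sqrt(dot(y, y)))
    # Clamp to [-1, 1] to guard against floating-point round-off before acos.
    cos_omega = max(-1.0, min(1.0, cos_omega))
    return math.acos(cos_omega)

# Example vectors chosen for illustration; the angle is roughly 0.32 rad.
omega = angle([1.0, 1.0], [1.0, 2.0])
```

The clamp matters in practice: for nearly parallel vectors, round-off can push the ratio slightly outside $[-1, 1]$, where `math.acos` would raise an error.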

Rotation Matrix

In $\mathbb{R}^2$, the rotation matrix to rotate a vector by $\theta$ is:

$$ \begin{bmatrix} \cos \theta & -\sin \theta \\ \sin \theta & \cos \theta \end{bmatrix} $$

Think of it this way

We have two standard basis vectors $\mathbf{e}_1 = [1, 0]^T$ and $\mathbf{e}_2 = [0, 1]^T$.

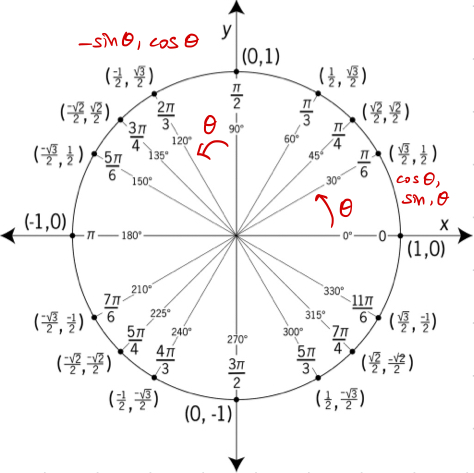

Think of the unit circle.

After rotating $\mathbf{e}_1$ (the $0$ or $2\pi$ point) by $\theta$ counter-clockwise, it should land on $[\cos \theta, \sin \theta]^T$.

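Similarly, after rotating $\mathbf{e}_2$ (the $\frac{\pi}{2}$ point) by $\theta$ counter-clockwise, it should land on $[-\sin \theta, \cos \theta]^T$.

These two images are exactly the first and second columns of the rotation matrix.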
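The column picture can be checked numerically. The sketch below builds the $2 \times 2$ rotation matrix and applies it to the standard basis vectors; the helpers `rotation` and `matvec` and the angle $\pi/6$ are assumptions made for illustration.

```python
import math

def rotation(theta):
    """2x2 counter-clockwise rotation matrix as a list of rows."""
    return [[math.cos(theta), -math.sin(theta)],
            [math.sin(theta),  math.cos(theta)]]

def matvec(A, x):
    """Matrix-vector product A x."""
    return [sum(a * b for a, b in zip(row, x)) for row in A]

theta = math.pi / 6  # arbitrary angle chosen for illustration
R = rotation(theta)

e1_rot = matvec(R, [1.0, 0.0])  # image of e1: [cos(theta), sin(theta)]
e2_rot = matvec(R, [0.0, 1.0])  # image of e2: [-sin(theta), cos(theta)]
```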

Orthogonality

Two vectors $\mathbf{x}$ and $\mathbf{y}$ are orthogonal, denoted as $\mathbf{x} \perp \mathbf{y}$, if their inner product is zero:

$$ \langle\mathbf{x},\mathbf{y}\rangle = 0 \iff \mathbf{x} \perp \mathbf{y} $$

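The zero vector is orthogonal to every vector.

Remember that orthogonality is defined with respect to a specific inner product: two vectors that are orthogonal under one inner product need not be orthogonal under another.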
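To illustrate the dependence on the inner product, the sketch below evaluates $\langle\mathbf{x},\mathbf{y}\rangle = \mathbf{x}^T \mathbf{M} \mathbf{y}$ for two choices of a symmetric positive definite matrix $\mathbf{M}$. The function `inner`, the matrices, and the vectors are illustrative assumptions.

```python
def inner(x, y, M):
    """Inner product <x, y> = x^T M y for a symmetric positive definite M."""
    n = len(x)
    return sum(x[i] * M[i][j] * y[j] for i in range(n) for j in range(n))

identity = [[1, 0], [0, 1]]   # gives the ordinary dot product
weighted = [[2, 0], [0, 1]]   # a different (weighted) inner product

x, y = [1, 1], [-1, 1]

dot_xy = inner(x, y, identity)       # 0: orthogonal under the dot product
weighted_xy = inner(x, y, weighted)  # -1: not orthogonal under the weighted one
```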

Orthonormal

Two vectors $\mathbf{x}$ and $\mathbf{y}$ are orthonormal if they are orthogonal and both are unit vectors:

$$ \langle\mathbf{x},\mathbf{y}\rangle = 0 \wedge \|\mathbf{x}\| = \|\mathbf{y}\| = 1 $$

Orthogonal Matrix

A square matrix $\mathbf{A}$ is orthogonal if and only if its columns are orthonormal.

In other words,

$$ \mathbf{A}^T \mathbf{A} = \mathbf{I} = \mathbf{A} \mathbf{A}^T $$

By convention such a matrix is called an orthogonal matrix, although "orthonormal matrix" would describe it more accurately.

Inverse of Orthogonal Matrix

The above definition implies that

$$ \mathbf{A}^{-1} = \mathbf{A}^T $$
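A quick numerical check of this property, assuming a $2 \times 2$ rotation matrix as the example orthogonal matrix; the helpers `transpose`, `matmul`, and `is_identity` are hypothetical names introduced here.

```python
import math

def transpose(A):
    """Transpose of a matrix stored as a list of rows."""
    return [list(col) for col in zip(*A)]

def matmul(A, B):
    """Matrix product A B."""
    Bt = transpose(B)
    return [[sum(a * b for a, b in zip(row, col)) for col in Bt] for row in A]

def is_identity(M, tol=1e-12):
    """True if M equals the identity matrix up to the tolerance."""
    n = len(M)
    return all(abs(M[i][j] - (1.0 if i == j else 0.0)) <= tol
               for i in range(n) for j in range(n))

theta = 0.7  # arbitrary angle chosen for illustration
A = [[math.cos(theta), -math.sin(theta)],
     [math.sin(theta),  math.cos(theta)]]

left = matmul(transpose(A), A)   # A^T A
right = matmul(A, transpose(A))  # A A^T
```

Both products being the identity is what lets the transpose stand in for the inverse, which avoids solving a linear system.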

Orthogonal Transformation

Suppose an orthogonal matrix $\mathbf{A}$ is a transformation matrix of some linear transformation.

Then, for any $\mathbf{x}, \mathbf{y} \in \mathbb{R}^n$, this transformation preserves the inner product and is called an orthogonal transformation:

$$ \langle\mathbf{A}\mathbf{x},\mathbf{A}\mathbf{y}\rangle = \langle\mathbf{x},\mathbf{y}\rangle $$

Because an orthogonal transformation preserves the inner product, it also preserves the lengths of vectors and the angles between them:

$$ \|\mathbf{A}\mathbf{x}\| = \|\mathbf{x}\| \wedge \frac{\langle\mathbf{A}\mathbf{x},\mathbf{A}\mathbf{y}\rangle} {\|\mathbf{A}\mathbf{x}\| \|\mathbf{A}\mathbf{y}\|} = \frac{\langle\mathbf{x},\mathbf{y}\rangle} {\|\mathbf{x}\| \|\mathbf{y}\|} $$
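The following sketch checks both invariants numerically for the dot product, again assuming a rotation matrix as the orthogonal transformation. The vectors, the angle, and the helper names are illustrative.

```python
import math

def dot(x, y):
    """Standard dot product."""
    return sum(a * b for a, b in zip(x, y))

def norm(x):
    """Euclidean norm induced by the dot product."""
    return math.sqrt(dot(x, x))

def matvec(A, x):
    """Matrix-vector product A x."""
    return [sum(a * b for a, b in zip(row, x)) for row in A]

theta = 1.2  # arbitrary angle chosen for illustration
A = [[math.cos(theta), -math.sin(theta)],
     [math.sin(theta),  math.cos(theta)]]

x, y = [3.0, -1.0], [0.5, 2.0]
Ax, Ay = matvec(A, x), matvec(A, y)

inner_before, inner_after = dot(x, y), dot(Ax, Ay)  # equal up to round-off
norm_before, norm_after = norm(x), norm(Ax)         # equal up to round-off
```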

Determinant of Orthogonal Matrix

If $\mathbf{A}$ is an orthogonal matrix, its determinant satisfies:

$$ \lvert\det(\mathbf{A})\rvert = 1 $$

Why is the absolute value 1?

\[\begin{align*} 1 &= \det(I) \tag*{Determinant of identity matrix} \\ &= \det(A^\top A) \tag*{Definition of orthogonal matrix} \\ &= \det(A^\top)\det(A) \tag*{Multiplicativity of determinant} \\ &= \det(A)^2 \tag*{Determinant of a transpose} \end{align*}\]

Therefore, $\det(A) = \pm 1$.
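Both signs do occur. As a small sketch, a rotation has determinant $+1$ and a reflection across the horizontal axis has determinant $-1$; the helper `det2` and the two example matrices are assumptions for illustration.

```python
import math

def det2(M):
    """Determinant of a 2x2 matrix stored as a list of rows."""
    return M[0][0] * M[1][1] - M[0][1] * M[1][0]

theta = math.pi / 3  # arbitrary angle chosen for illustration
rotation = [[math.cos(theta), -math.sin(theta)],
            [math.sin(theta),  math.cos(theta)]]
reflection = [[1.0, 0.0],
              [0.0, -1.0]]  # flips the second coordinate

det_rotation = det2(rotation)      # cos^2 + sin^2, i.e. 1 up to round-off
det_reflection = det2(reflection)  # exactly -1
```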

Orthonormal Basis

Review the concepts of basis here.

Let $V$ be an $n$-dimensional inner product space with basis $B = \{\mathbf{b}_1, \dots, \mathbf{b}_n\}$.

$B$ is an orthonormal basis if:

$$ \begin{cases} \langle\mathbf{b}_i, \mathbf{b}_j\rangle = 0 & \text{if } i \neq j \\[1em] \langle\mathbf{b}_i, \mathbf{b}_i\rangle = 1 & (\|\mathbf{b}_i\| = 1) \end{cases} $$

If only the first condition is satisfied, then $B$ is an orthogonal basis.
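The two conditions can be tested pairwise. The sketch below assumes the dot product and checks only the inner-product conditions; it takes for granted that the vectors already form a basis. The names `is_orthogonal`, `is_orthonormal`, `B`, and `B_tilde` are illustrative.

```python
import math

def dot(x, y):
    """Standard dot product."""
    return sum(a * b for a, b in zip(x, y))

def is_orthogonal(basis, tol=1e-12):
    """True if every pair of distinct vectors has inner product zero."""
    n = len(basis)
    return all(abs(dot(basis[i], basis[j])) <= tol
               for i in range(n) for j in range(i + 1, n))

def is_orthonormal(basis, tol=1e-12):
    """True if the vectors are pairwise orthogonal and each has unit length."""
    return (is_orthogonal(basis, tol)
            and all(abs(dot(b, b) - 1.0) <= tol for b in basis))

s = 1.0 / math.sqrt(2.0)
B = [[s, s], [s, -s]]                  # orthonormal basis of R^2
B_tilde = [[1.0, 1.0], [1.0, -1.0]]    # orthogonal, but not unit length
```

Here `B_tilde` satisfies only the first condition, and dividing each of its vectors by its norm yields `B`.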

Finding Orthonormal Basis

Given a set of basis vectors that are not orthonormal, stacked into a matrix $\tilde{B}$, we can construct an orthonormal basis $B$ by performing Gaussian elimination on the augmented matrix

\[[\tilde{B} \tilde{B}^T \mid \tilde{B}]\]